Integrating Confluent Cloud with ClickHouse

Prerequisites

We assume you are familiar with:

- ClickHouse Connector Sink

- Confluent Cloud and Custom Connectors

The official Kafka connector from ClickHouse with Confluent Cloud

Installing on Confluent Cloud

This is meant to be a quick guide to get you started with the ClickHouse Sink Connector on Confluent Cloud. For more details, please refer to the official Confluent documentation.

Create a Topic

Creating a topic on Confluent Cloud is fairly simple, and there are detailed instructions here.

Important Notes

- The Kafka topic name must match the ClickHouse table name. To write a topic to a differently named table, use a transform (for example, ExtractTopic).

- More partitions do not always mean better performance. See our upcoming guide for more details and performance tips.
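As a rough illustration of the first note, the sketch below adds a topic-renaming transform to a connector configuration. It uses Apache Kafka's built-in RegexRouter transform rather than the ExtractTopic transform mentioned above, because RegexRouter ships with Kafka Connect itself. The helper name `add_topic_route` and the alias `routeToTable` are hypothetical, and whether your Custom Connector setup accepts transform properties is an assumption you should verify.

```python
import re


def add_topic_route(config, topic, table):
    """Return a copy of `config` that routes `topic` to the table name `table`.

    Hypothetical helper: it overwrites any existing "transforms" entry,
    so merge by hand if the configuration already defines transforms.
    """
    routed = dict(config)
    if topic == table:
        # Names already match, so no transform is needed.
        return routed
    alias = "routeToTable"  # arbitrary alias chosen for this sketch
    routed["transforms"] = alias
    routed[f"transforms.{alias}.type"] = (
        "org.apache.kafka.connect.transforms.RegexRouter"
    )
    # Escape the topic so characters such as "." match literally.
    routed[f"transforms.{alias}.regex"] = re.escape(topic)
    # Assumes the table name contains no "$" or "\" characters, which are
    # special in Java regex replacement strings.
    routed[f"transforms.{alias}.replacement"] = table
    return routed
```

Calling `add_topic_route({"topics": "orders.v1"}, "orders.v1", "orders")` yields a configuration in which records from the `orders.v1` topic are presented to the connector under the topic name `orders`.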

Install Connector

You can download the connector from our repository - please feel free to submit comments and issues there as well!

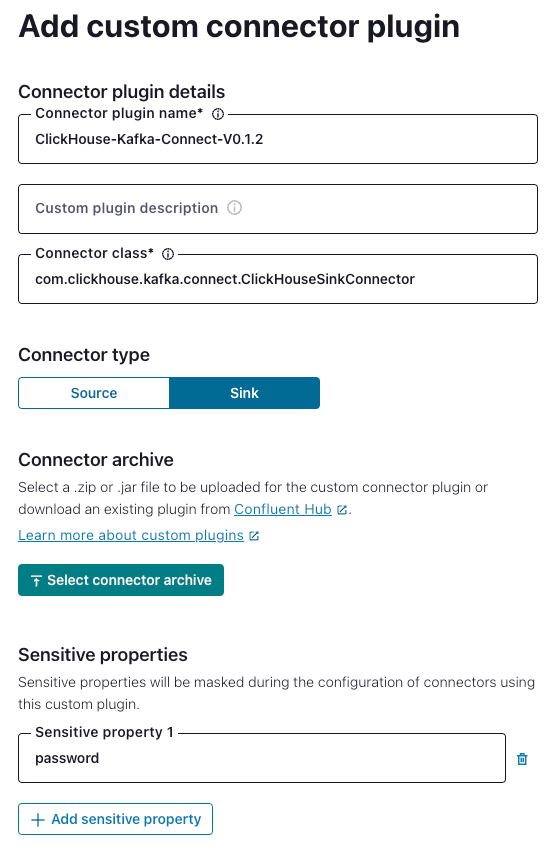

Navigate to “Connector Plugins” -> “Add plugin” and use the following settings:

'Connector Class' - 'com.clickhouse.kafka.connect.ClickHouseSinkConnector'

'Connector type' - Sink

'Sensitive properties' - 'password'. This will ensure entries of the ClickHouse password are masked during configuration.

Example:

Gather your connection details

To connect to ClickHouse with HTTP(S) you need this information:

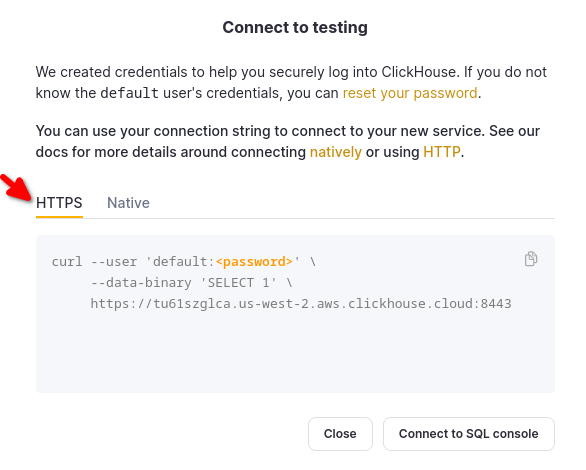

- The HOST and PORT: typically, the port is 8443 when using TLS or 8123 when not using TLS.

- The DATABASE NAME: out of the box, there is a database named default; use the name of the database that you want to connect to.

- The USERNAME and PASSWORD: out of the box, the username is default. Use the username appropriate for your use case.

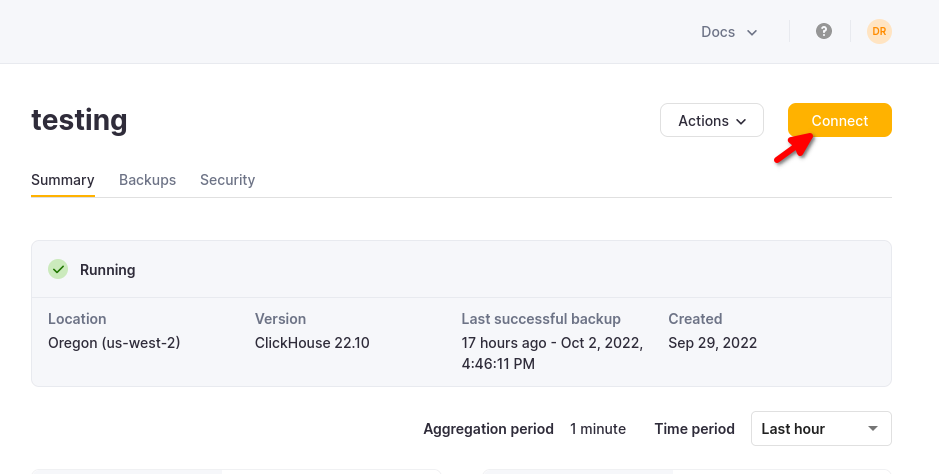

The details for your ClickHouse Cloud service are available in the ClickHouse Cloud console. Select the service that you will connect to and click Connect:

Choose HTTPS, and the details are available in an example curl command.

If you are using self-managed ClickHouse, the connection details are set by your ClickHouse administrator.
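Before configuring the connector, it can help to confirm that the details you gathered actually work. The following is a minimal sketch, assuming the ClickHouse HTTP interface is reachable from your machine and accepts HTTP Basic authentication; the function names `base_url` and `run_query` are hypothetical and not part of any ClickHouse client library.

```python
import base64
import urllib.parse
import urllib.request


def base_url(host, port=8443, tls=True):
    """Build the HTTP(S) base URL from the gathered host and port."""
    scheme = "https" if tls else "http"
    return f"{scheme}://{host}:{port}"


def run_query(host, username, password, query="SELECT 1",
              port=8443, tls=True, database="default"):
    """Send `query` over the ClickHouse HTTP interface and return the body."""
    params = urllib.parse.urlencode({"database": database})
    request = urllib.request.Request(
        f"{base_url(host, port, tls)}/?{params}",
        data=query.encode("utf-8"),  # the query travels in the POST body
        method="POST",
    )
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    request.add_header("Authorization", f"Basic {token.decode('ascii')}")
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8").strip()
```

For example, `run_query("<CLICKHOUSE_HOSTNAME>", "<SAMPLE_USERNAME>", "<SAMPLE_PASSWORD>")` should return without raising if the host, port, and credentials are correct.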

Configure the Connector

Navigate to Connectors -> Add Connector and use the following settings (note that the values are examples only):

{
  "database": "<DATABASE_NAME>",
  "errors.retry.timeout": "30",
  "exactlyOnce": "false",
  "schemas.enable": "false",
  "hostname": "<CLICKHOUSE_HOSTNAME>",
  "password": "<SAMPLE_PASSWORD>",
  "port": "8443",
  "ssl": "true",
  "topics": "<TOPIC_NAME>",
  "username": "<SAMPLE_USERNAME>",
  "key.converter": "org.apache.kafka.connect.storage.StringConverter",
  "value.converter": "org.apache.kafka.connect.json.JsonConverter",
  "value.converter.schemas.enable": "false"
}
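Since the values above are placeholders, a quick check before submitting the configuration can catch entries that were never filled in. The sketch below is one possible pre-flight check, not part of the connector: the helper name `check_sink_config` is hypothetical, and the list of keys it treats as required is an assumption drawn from the example above rather than from the connector's documentation.

```python
# Keys treated as required here are an assumption based on the example
# configuration, not an authoritative list from the connector.
REQUIRED_KEYS = ("hostname", "port", "database", "username", "password", "topics")


def check_sink_config(config):
    """Return a list of human-readable problems found in `config`."""
    problems = []
    for key in REQUIRED_KEYS:
        if not config.get(key):
            problems.append(f"missing value for '{key}'")
    for key, value in config.items():
        if not isinstance(value, str):
            # The example uses string values throughout, so follow that.
            problems.append(f"'{key}' should be a string")
        elif value.startswith("<") and value.endswith(">"):
            problems.append(f"'{key}' still contains a placeholder")
    port = config.get("port")
    if isinstance(port, str) and port and not port.isdigit():
        problems.append("'port' must be numeric")
    return problems
```

An empty list means none of these basic checks failed; it does not guarantee that the connector will accept the configuration.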

Specify the connection endpoints

You need to specify the allow-list of endpoints that the connector can access.

You must use a fully-qualified domain name (FQDN) when adding the networking egress endpoint(s).

Example: u57swl97we.eu-west-1.aws.clickhouse.com:8443

You must specify the HTTP(S) port. The connector does not support the Native protocol yet.
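The endpoint rules above can be sketched as a small formatting helper that rejects bare IP addresses and produces the `host:port` form shown in the example. The name `egress_endpoint` is hypothetical, and the "contains a dot" test is only a rough stand-in for a real FQDN check.

```python
import ipaddress


def egress_endpoint(host, port=8443):
    """Format an allow-list entry as `host:port`, requiring a domain name."""
    host = host.strip()
    try:
        ipaddress.ip_address(host)
    except ValueError:
        pass  # not an IP address, which is what we want
    else:
        raise ValueError("use a fully-qualified domain name, not an IP address")
    if "." not in host:
        # Rough heuristic: a single label such as "localhost" is not an FQDN.
        raise ValueError("host does not look like a fully-qualified domain name")
    if not 0 < int(port) < 65536:
        raise ValueError("port must be between 1 and 65535")
    return f"{host}:{port}"
```

Passing the example host `u57swl97we.eu-west-1.aws.clickhouse.com` with the default port reproduces the endpoint string shown above.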

You should be all set!

Known Limitations

- Custom Connectors must use public internet endpoints. Static IP addresses aren't supported.

- You can override some Custom Connector properties. See the full list in the official documentation.

- Custom Connectors are available only in some AWS regions.

- See the list of Custom Connector limitations in the official docs.